As a pervasive systems research community we’re doing quite well at automatically identifying simple things happening in the world. What is the state of the art, and what are the next steps?

Pervasive computing is about letting computers see and respond to human activity. In healthcare applications, for example, this might involve monitoring an elderly person in their home and checking for signs of normality: doors opening, the fridge being accessed, the toilet flushing, the bedroom lights going on and off at the right times, and so on. A collection of simple sensors can provide the raw observational data, and we can monitor this stream of data for the “expected” behaviours. If we don’t see them (no movement for over two hours during daytime, for example) then we can sound an alarm and alert a carer. Done correctly, this sort of system can literally be a life-saver, and it can also make all the difference for people with degenerative illnesses living in their own homes.

The science behind these systems is often referred to as activity and situation recognition, both of which are forms of context fusion. To deal with these in the wrong order: context fusion is the ability to take several streams of raw sensor (and other) data and use them to make inferences; activity recognition is the detection of simple actions in this data stream (lifting a cup, chopping with a knife); and situation recognition is the semantic interpretation of a high-level process (making tea, watching television, being in a medical emergency). Having identified the situation, we can then provide an appropriate behaviour for the system, which might involve changing the way the space is configured (dimming the lights, turning down the sound volume), providing information (“here’s the recipe you chose for tonight”) or taking some external action (calling for help). This sort of context-aware behaviour is the overall goal.
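The simple inactivity rule mentioned above (“no movement for over two hours during daytime”) is easy to sketch directly. The Python below is a minimal illustration under stated assumptions, not any deployed system: it assumes that any sensor event counts as a sign of activity, that “daytime” means 08:00 to 22:00, and that a quiet night shouldn’t by itself count towards the two-hour limit. The `SensorEvent` and `InactivityMonitor` names and the sensor names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, time, timedelta

# Assumed parameters: the two-hour limit comes from the example in the text;
# the 08:00-22:00 definition of "daytime" is a hypothetical choice.
MAX_DAYTIME_GAP = timedelta(hours=2)
DAY_START = time(8, 0)
DAY_END = time(22, 0)


@dataclass
class SensorEvent:
    sensor: str          # hypothetical sensor name, e.g. "kitchen-motion"
    timestamp: datetime


class InactivityMonitor:
    """Flags prolonged daytime inactivity in a stream of sensor events."""

    def __init__(self, start: datetime):
        self.last_activity = start

    def observe(self, event: SensorEvent) -> None:
        # Assumption: any sensor firing counts as a sign of normal activity.
        self.last_activity = max(self.last_activity, event.timestamp)

    def should_alert(self, now: datetime) -> bool:
        if not (DAY_START <= now.time() < DAY_END):
            return False  # only daytime inactivity is treated as abnormal
        # Count quiet time only from the start of today's daytime window,
        # so a quiet night does not by itself trigger an alert.
        day_start = datetime.combine(now.date(), DAY_START)
        quiet_since = max(self.last_activity, day_start)
        return now - quiet_since > MAX_DAYTIME_GAP


if __name__ == "__main__":
    monitor = InactivityMonitor(start=datetime(2011, 6, 1, 7, 0))
    monitor.observe(SensorEvent("kitchen-motion", datetime(2011, 6, 1, 9, 0)))
    print(monitor.should_alert(datetime(2011, 6, 1, 10, 30)))  # False: 1.5 hours quiet
    print(monitor.should_alert(datetime(2011, 6, 1, 11, 30)))  # True: 2.5 hours quiet
    print(monitor.should_alert(datetime(2011, 6, 1, 23, 30)))  # False: night-time
```

Rules like this are easy to write but brittle: they know nothing about sensor noise or about what the person is actually doing, which is part of why the field turns to the uncertain reasoning techniques discussed below.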
The state of the art in context fusion uses some sort of uncertain reasoning, including machine learning and other techniques that fall broadly within artificial intelligence. These are typically more complicated than the complex event processing techniques used in financial systems and the like, because they have to deal with significant noise in the data stream. (Ye, Dobson and McKeever. Situation recognition techniques in pervasive computing: a review. Pervasive and Mobile Computing. 2011.) The results are rather mixed, with a typical technique (a naive Bayesian classifier, for example) able to identify some situations well and others far more poorly: there doesn’t seem to be a uniformly “good” technique yet. Despite this we can now achieve scores of 60-80% (by F-measure, which combines a classifier’s precision and recall into a single measure of its “goodness”) on simple activities and situations. That sounds good, but the next steps are going to be far harder.

To see what the next step is, consider that most of the systems explored have been evaluated under laboratory conditions. These allow fine control over the environment, and that’s precisely the problem. The next challenges for situation recognition come directly from the loss of control over what’s being observed.

Let’s break down what needs to happen. Firstly, we need to be able to describe situations in a way that lets us capture human processes. This is easy to do for another human but tricky for a computer: the precision with which we need to express computational tasks gets in the way. For example, we might describe the process of making lunch as retrieving the bread from the cupboard, the cheese and butter from the fridge and a plate from the rack, and then spreading the butter, cutting the cheese and assembling the sandwich. That’s a good enough description for a human, but most of the time it isn’t exactly what happens.
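The brittleness of a precise description like this can be shown in a few lines. The sketch below is illustrative only: it assumes the sensors deliver clean, discrete events with hypothetical names such as `get_bread` and `spread_butter`. It compares a strict-sequence encoding of the lunch description with a looser one that records only which steps must come before which (a partial order).

```python
# Hypothetical event names for the lunch-making example in the text.
STEPS = ["get_bread", "get_cheese", "get_butter", "get_plate",
         "spread_butter", "cut_cheese", "assemble"]

# Only the orderings that genuinely matter: butter can't be spread before the
# bread and butter are out, and the sandwich can't be assembled before its
# parts (and a plate) are ready.
MUST_PRECEDE = [
    ("get_bread", "spread_butter"),
    ("get_butter", "spread_butter"),
    ("get_cheese", "cut_cheese"),
    ("spread_butter", "assemble"),
    ("cut_cheese", "assemble"),
    ("get_plate", "assemble"),
]


def matches_strict(trace: list[str]) -> bool:
    """Accept only if the steps occur in the order given by STEPS.

    Unrelated events may be interleaved: this is a subsequence test.
    """
    remaining = iter(trace)
    # `step in remaining` consumes the iterator up to the first match,
    # so each step must be found after the previous one.
    return all(step in remaining for step in STEPS)


def matches_partial_order(trace: list[str]) -> bool:
    """Accept any ordering of the steps that respects MUST_PRECEDE."""
    first_seen: dict[str, int] = {}
    for i, event in enumerate(trace):
        first_seen.setdefault(event, i)
    if any(step not in first_seen for step in STEPS):
        return False  # some step never happened
    return all(first_seen[a] < first_seen[b] for a, b in MUST_PRECEDE)


if __name__ == "__main__":
    # The filling is remembered only after the butter has been spread.
    forgot_filling = ["get_bread", "get_butter", "get_plate", "spread_butter",
                      "get_cheese", "cut_cheese", "assemble"]
    print(matches_strict(STEPS), matches_partial_order(STEPS))  # True True
    print(matches_strict(forgot_filling), matches_partial_order(forgot_filling))  # False True
```

The strict version rejects a perfectly ordinary lunch in which the filling was fetched late; the partial-order version accepts it while still rejecting a sandwich assembled before the cheese arrived. Neither copes with noisy or missing sensor events, so this addresses only part of the problem.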
One might retrieve the elements in a different order, or start making the sandwich (get the bread, spread the butter) only to realise the filling is missing, go back for the cheese and then resume assembling the sandwich, and so on. The point is that this isn’t programming: people don’t do what you expect them to do, and there are so many variations on the basic process that they seem to defy capture, although no human observer would have the slightest difficulty in classifying what they were seeing. A first challenge is therefore to find a way of expressing the real-world processes and situations we want to recognise that is robust to the things people actually do. (Incidentally, this way of thinking shows that situation recognition is the dual of traditional workflow. In a workflow system you specify a process and force the human agents to comply; in a situation description the humans do what they do and the computers try to keep up.)

The second challenge is that, even when captured, situations don’t occur in isolation. We might define a second situation to control what happens when the person answers the phone: quieten the TV and stereo, perhaps. But this situation could be happening at the same time as the lunch-making situation, and will interpenetrate with it. There are dozens of possible interactions: one might pause lunch to deal with the phone call, or continue making the sandwich while chatting, or some other combination. Again, that’s fine for a human observer. But a computer trying to make sense of these happenings has only a limited, sensor-driven view, and has to associate events with interpretations without knowing ahead of time what it’s seeing. The fact that many things can happen simultaneously enormously complicates the challenge of identifying what’s going on robustly, damaging what is often already quite a tenuous process.
We therefore need techniques for describing situation compositions and interactions on top of the basic descriptions of the processes themselves.

The third challenge is also one of interaction, but this time involving multiple people. One person might be making lunch whilst another watches television; then the phone rings and one of them answers, realises the call is for the other, and passes it over. So as well as interpenetration we now have multiple agents generating sensor events, perhaps without our being able to determine exactly which person caused which event. (A motion sensor sees movement: it doesn’t see who’s moving, and the individuals may not be tagged in a way that lets them be identified or even told apart.) Real spaces involve multiple people, and this may place limits on the behaviours we can demonstrate. But at the very least we need to be able to describe processes involving multiple agents, and to support simultaneous situations in the same or different populations.

So for me the next challenges of situation recognition boil down to how we describe what we’re expecting to observe in a way that reflects the noise, complexity and concurrency of real-world conditions. Once we have these descriptions we can explore and improve the techniques we use to map from sensor data (itself noisy and hard to program with) to identified situations, and thence to behaviour. In many ways this is a real-world version of the concurrency theories and process algebras that were developed to describe concurrent computing processes: process languages brought into the real world, perhaps. This is the approach we’re taking in a European-funded research project, SAPERE, in which we’re hoping to understand how to engineer smart, context-aware systems on large and flexible scales.
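To give a flavour of the process-language idea, here’s a minimal sketch in Python. It assumes each situation is described by its own (hypothetical) vocabulary of events plus ordering constraints, and that concurrent situations share no events. Every recognizer sees the whole interleaved stream but attends only to its own events, loosely like the parallel composition of processes with disjoint alphabets in CSP. The `Situation` class and `run` function are illustrative names, not part of any real framework, and the sketch deliberately ignores noise, shared events and the question of who caused each event, which are exactly the hard parts described above.

```python
from dataclasses import dataclass, field


@dataclass
class Situation:
    """A situation described by its own events and the orderings between them."""
    name: str
    steps: frozenset[str]
    must_precede: list[tuple[str, str]]
    first_seen: dict[str, int] = field(default_factory=dict)

    def observe(self, index: int, event: str) -> None:
        # Events outside this situation's vocabulary are ignored, so other
        # situations can interleave freely with this one.
        if event in self.steps:
            self.first_seen.setdefault(event, index)

    def recognised(self) -> bool:
        # All steps seen, and every required ordering holds.
        return self.steps.issubset(self.first_seen) and all(
            self.first_seen[a] < self.first_seen[b] for a, b in self.must_precede
        )


def run(situations: list[Situation], stream: list[str]) -> list[str]:
    """Feed one interleaved event stream to every situation; return those recognised."""
    for i, event in enumerate(stream):
        for situation in situations:
            situation.observe(i, event)
    return [s.name for s in situations if s.recognised()]


if __name__ == "__main__":
    lunch = Situation(
        "making lunch",
        frozenset({"get_bread", "get_cheese", "spread_butter", "assemble"}),
        [("get_bread", "spread_butter"), ("spread_butter", "assemble"),
         ("get_cheese", "assemble")],
    )
    phone = Situation(
        "answering the phone",
        frozenset({"phone_rings", "phone_lifted", "phone_replaced"}),
        [("phone_rings", "phone_lifted"), ("phone_lifted", "phone_replaced")],
    )
    # The phone call interleaves with lunch-making in a single sensor stream.
    stream = ["get_bread", "phone_rings", "phone_lifted", "spread_butter",
              "get_cheese", "phone_replaced", "assemble"]
    print(run([lunch, phone], stream))  # ['making lunch', 'answering the phone']
```

This separation works only while the situations’ vocabularies are disjoint. Once two situations can trigger the same sensor, or several people generate the same kinds of event, each event has to be attributed to an interpretation, and the simple “ignore what isn’t mine” rule breaks down.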

On a whim yesterday I installed a new operating system on an old netbook. Looking at it makes me realise what we can do for the next wave of computing devices, even for things that are notionally “real” computers.

I’ve been hearing about Jolicloud for a while. It’s been positioning itself as an operating system for devices that will be internet-connected most of the time: netbooks at the moment, moving to Android phones and tablets. It also claims to be ideal for recycling old machines, so I decided to install it on an Asus Eee 901 I had lying around.

The process of installation turns out to be ridiculously easy: download a CD image, download a USB-stick-creator utility, install the image on the stick and re-boot. Jolicloud runs off the stick while you try it out; you can keep it this way and leave the host machine untouched, or run the installer from inside Jolicloud to install it as the only OS or to dual-boot alongside whatever you’ve already got. This got my attention straight away: Jolicloud isn’t demanding, and doesn’t require you to do things in a particular way or order.

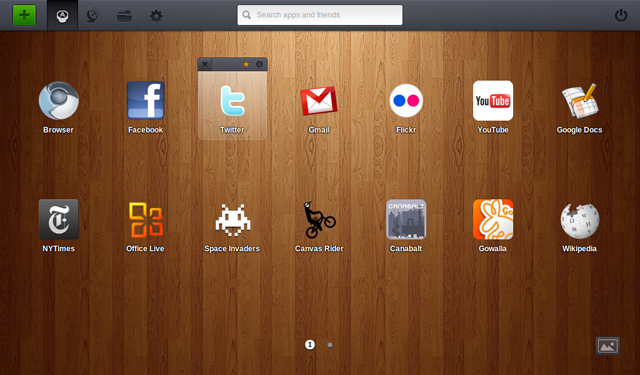

Once running (after a very fast boot sequence) Jolicloud presents a single-window start-up screen with a selection of apps. A lot of the usual suspects are there, including a web browser (Google Chrome), Facebook, Twitter, Gmail and a weather application, and there’s an app repository from which one can download many more. Sound familiar? It even looks a little like an iPhone or Android smartphone user interface.

I dug under the hood a little. The first thing to notice is that the interface is clearly designed for touchscreens. Everything happens with a single click, the elements are well spaced and there’s generally a “back” button whenever you go into a particular mode. It bears more than a passing resemblance to the iPhone and Android (although there are no gestures or multitouch, perhaps as a concession to the variety of hardware targets).

The second thing to realise is that Jolicloud is really just a skin over Ubuntu Linux. Underneath there’s a full Linux installation running the X Window System, so it can run any Linux application you care to install, as long as you’re prepared to drop out of the Jolicloud look-and-feel into a more traditional set-up. You can even install Wine, the Windows compatibility layer, and run Windows binaries if you wish. (I used this to get a Kindle reader installed.)

If this were all there was to Jolicloud I wouldn’t be excited. What sets it apart is suggested by the “cloud” part of the name. Jolicloud isn’t intended as an operating system for single devices. Instead, the intention is that everything a user does lives on the web (“in the cloud,” in the more recent parlance; not sure that adds anything…) and is simply accessed from one or more devices running Jolicloud. The start-up screen is actually a browser window (Chrome again) using HTML5 and JavaScript to provide a complete interface. Its contents are mainly hyperlinks to web services: the Facebook “app” just takes you to the Facebook web page, with a few small amendments made browser-side. A small number are links to local applications; most are little more than hyperlinks, or small wrappers around web pages.

The significance of this for users is the slickness it can offer. Installing software is essentially trivial, since most of the “apps” are actually just hyperlinks. That significantly reduces the opportunity for software clashes and installation problems, and the core set of services can be synchronised over the air. Of course you can install locally (I installed Firefox), but it’s more awkward and involves dropping down a level into the Linux GUI. The tendency will be to migrate services onto web sites and access them through a browser, and the Jolicloud app repository encourages this trend: why do things the hard way when you can use a web-based version of a similar service? For data, Jolicloud also comes complete with Dropbox to store files “in the cloud”.

Actually it’s even better than that. The start screen is synced to a Jolicloud account, which means that if you have several Jolicloud devices your data and apps will always be the same, without any action on your part: all your devices stay synchronised because there isn’t really all that much local data to synchronise. If you buy a new machine, everything will migrate automatically. In fact you can run your Jolicloud desktop from any machine running the latest Firefox, Internet Explorer or Chrome browser, because it’s all just HTML and JavaScript: web standards that can run inside any appropriate browser environment. (It greys out any locally installed apps.)
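The arrangement described above, where the start screen is mostly a synced list of links, can be sketched as a tiny data model. This is a hypothetical illustration in Python, not Jolicloud’s actual implementation (which, as noted, is HTML5 and JavaScript): the `App` class, the per-account `ACCOUNT_START_SCREENS` store, the `local_command` field and the `start_screen_for` function are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class App:
    """One entry on a hypothetical cloud-synced start screen."""
    name: str
    url: Optional[str] = None            # a web "app" is little more than a hyperlink
    local_command: Optional[str] = None  # hypothetical: a locally installed application

    @property
    def is_web(self) -> bool:
        return self.url is not None


# The app list lives with the account (here just a dict standing in for a
# server), so every device that signs in sees the same start screen.
ACCOUNT_START_SCREENS: dict[str, list[App]] = {}


def start_screen_for(account: str, native_device: bool) -> list[tuple[str, bool]]:
    """Return (name, enabled) pairs for an account's start screen.

    On a device running the full OS everything is enabled; in a plain
    browser session, local applications are greyed out (disabled).
    """
    apps = ACCOUNT_START_SCREENS.get(account, [])
    return [(app.name, app.is_web or native_device) for app in apps]


if __name__ == "__main__":
    ACCOUNT_START_SCREENS["me"] = [
        App("Facebook", url="https://www.facebook.com/"),
        App("Skype", local_command="skype"),
    ]
    print(start_screen_for("me", native_device=True))   # [('Facebook', True), ('Skype', True)]
    print(start_screen_for("me", native_device=False))  # [('Facebook', True), ('Skype', False)]
```

Because almost all of the state is a short list of links held against the account, “migrating” to a new machine amounts to signing in; only the local applications need any per-device work.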

I can see this sort of approach being a hit with many people: the same people to whom the iPad appeals, I would guess, who mainly consume media and interact with web sites, don’t ever really need local applications, and get frustrated when they can’t share data that’s on their local disc somewhere. Having a fairly standard OS distro underneath is an added benefit in terms of stability and flexibility. The simplicity is really appealing, and would make life much easier for elderly people, for example, for whom managing a standard Windows-based PC is a bit of a challenge. (Managing a standard Windows-based PC is completely beyond me, actually, as a Mac and Unix person, but that’s another story.)

But the other thing that Jolicloud demonstrates is both the strengths and the weaknesses of the iPhone model. The strengths are clear the moment you see the interface: simple, clear, finger-sized and appropriate for touch interaction. The app store model (albeit without payment, in Jolicloud’s case) is a major simplification over traditional approaches to managing software, and it’s now clear that it works on a “real” computer just as well as on a smartphone. Jolicloud can install Skype, for example, which is a “normal” application although typically viewed by users as some sort of web service. The abstraction of “everything in the cloud” works extremely well. (A more Jolicloud-like skin would make Skype even more attractive, but of course Skype isn’t open-source, so that’s difficult.)

Jolicloud also demonstrates that one can have such a model without closing down the software environment. You don’t have to vet all the applications, store them centrally, or enforce particular usage and payment models: those are commercial choices, not technical ones, and they close down the experience of owning the device they manage. One can perhaps make a case for this on smartphones, but much less so for netbooks, laptops and other computers.

I’m not ready to give up my MacBook Air as my main mobile device, but then again I do more on the move than most people. As simply a portable internet connection with a bigger screen than a smartphone, though, Jolicloud will be hard to beat. There are other contenders in the space, of course, but they’re either not really available yet (Google Chrome OS) or limited to particular platforms (WebOS). I’d certainly recommend a look at Jolicloud for anyone in the market for such a system, and that includes a lot of people who might never otherwise consider moving off Windows on a laptop: I’m already working on my mother-in-law.